Enhancing Operator Efficiency: Sage Shop Floor Redesign

MES Light / Shop Floor serves as the critical execution layer for Sage Operations, bridging the gap between ERP planning and shop-floor reality. It transforms rugged, shared kiosks from friction points into accurate data capture tools—ensuring that operator actions translate instantly into reliable OEE metrics.

This case study covers two phases:

- 2022 (0→1 MVP): replaced paper/Excel reporting with a minimum viable digital execution loop.

- 2023 (Shared kiosk revamp): remapped the MVP logic and information architecture to match real shop-floor constraints (multi-operator, frequent handovers, auditable time logs).

My role: Research + Lead UX/UI + Prototyper with AI-assisted development. I drove the end-to-end design (field studies → problem framing → IA → interaction → UI) and aligned with engineering on technical constraints (state model, timers, edge cases).

To comply with my non-disclosure agreement, I have omitted and obfuscated confidential information in this case study. All information in this case study is my own and does not necessarily reflect the views of Sage.

On the shop floor, the same execution data powers two worlds:

- Operators need a fast, low-friction experience to run work orders under pressure (gloves-on, standing, noisy environment).

- Supervisors/managers need trustworthy data (progress, downtime reasons, time logs) to drive KPIs (e.g., OEE/TRS) and continuous improvement.

The biggest constraint in this environment: the device is often a shared kiosk fixed to a station—the station owns the device, not the individual operator.

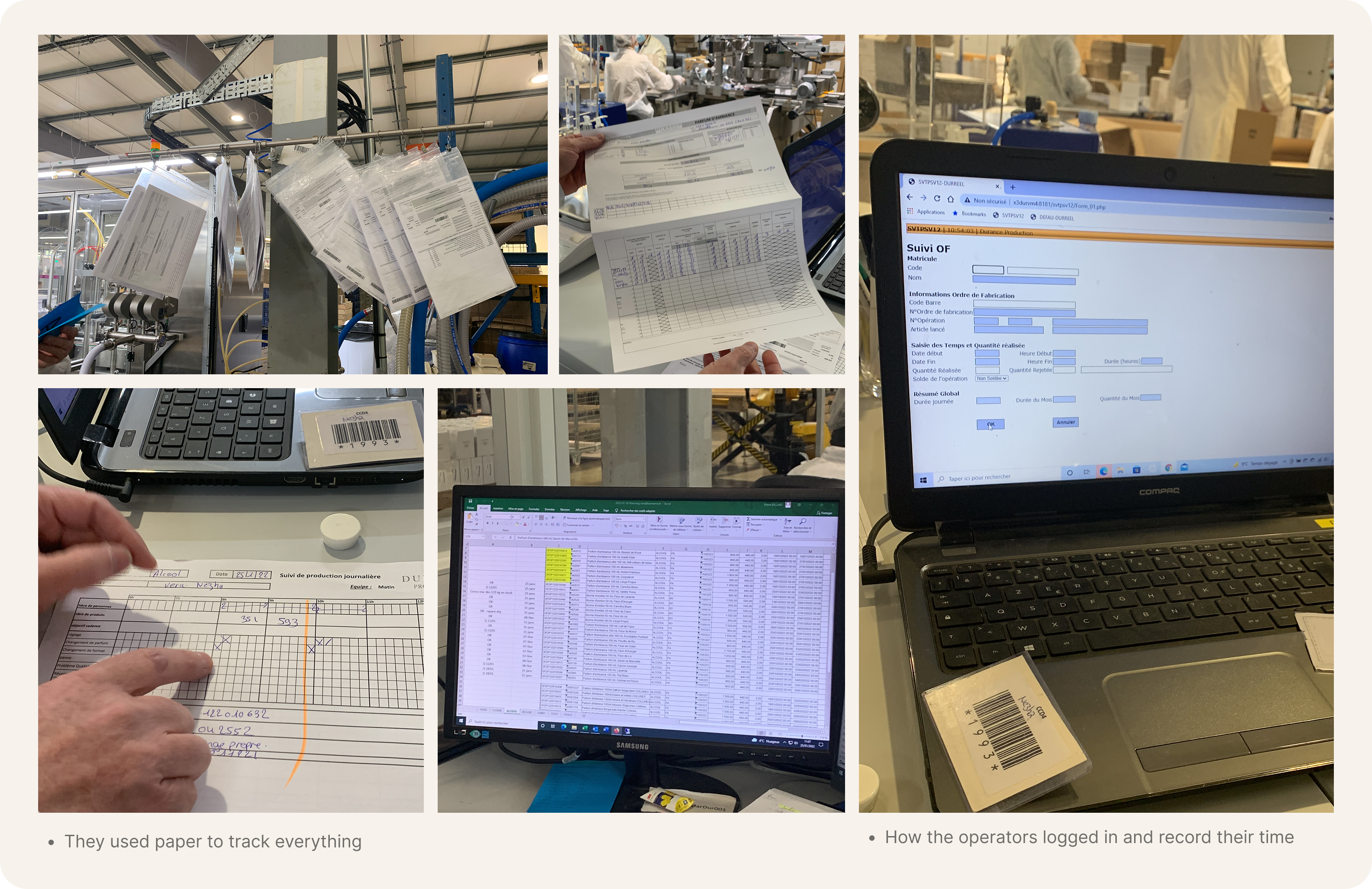

I conducted field studies at 3 client factories (one day each), shadowing operators during their shifts. I observed that shop-floor reporting was largely done via paper forms and Excel:

- updates and downtime reasons were often captured after the fact

- records were inconsistent and hard to audit

- handovers relied on informal notes and verbal updates

"I can't remember which orders I already logged—I just write everything down at the end of my shift."

— Machine Operator

"When the shift changes, I have to explain everything verbally to the next person. Sometimes things get lost."

— Line Supervisor

This created a gap between what was happening on the line and what systems and KPIs suggested was happening.

Without reliable, real-time execution data, the business faced:

- Untrustworthy KPIs — loss breakdowns and downtime categories were incomplete/inconsistent

- Planning & delivery risk — WO status drifted from reality, affecting scheduling and commitments

- Traceability cost — downtime, adjustments, and ownership were hard to prove, audit, or improve

In short: if shop-floor data isn’t timely and consistent, operational decisions and KPI-driven improvements become unreliable.

In a high-frequency shop-floor environment, operators needed a workflow that was fast, unambiguous, and interruption-resilient, but they struggled with:

- High reporting friction → missed entries and errors from backfilling

- Multi-operator handovers on a shared kiosk:

  - machine runtime must remain continuous when production continues

  - personal operator time must be attributed correctly (accountability/payroll)

  - pauses must capture a reason for traceability and analytics

- UI patterns not optimized for kiosk reality — too much typing, too many screens, too easy to mis-tap

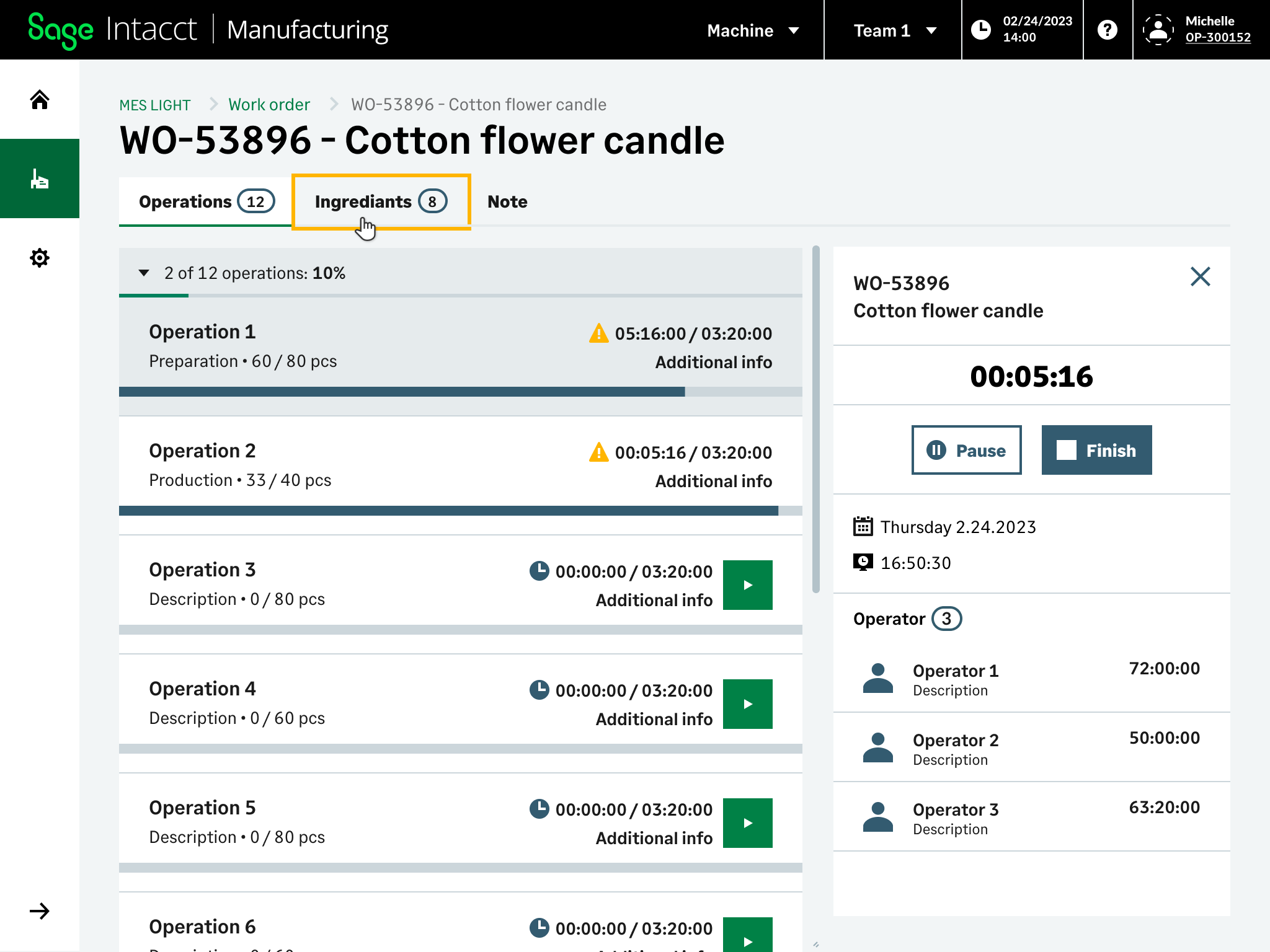

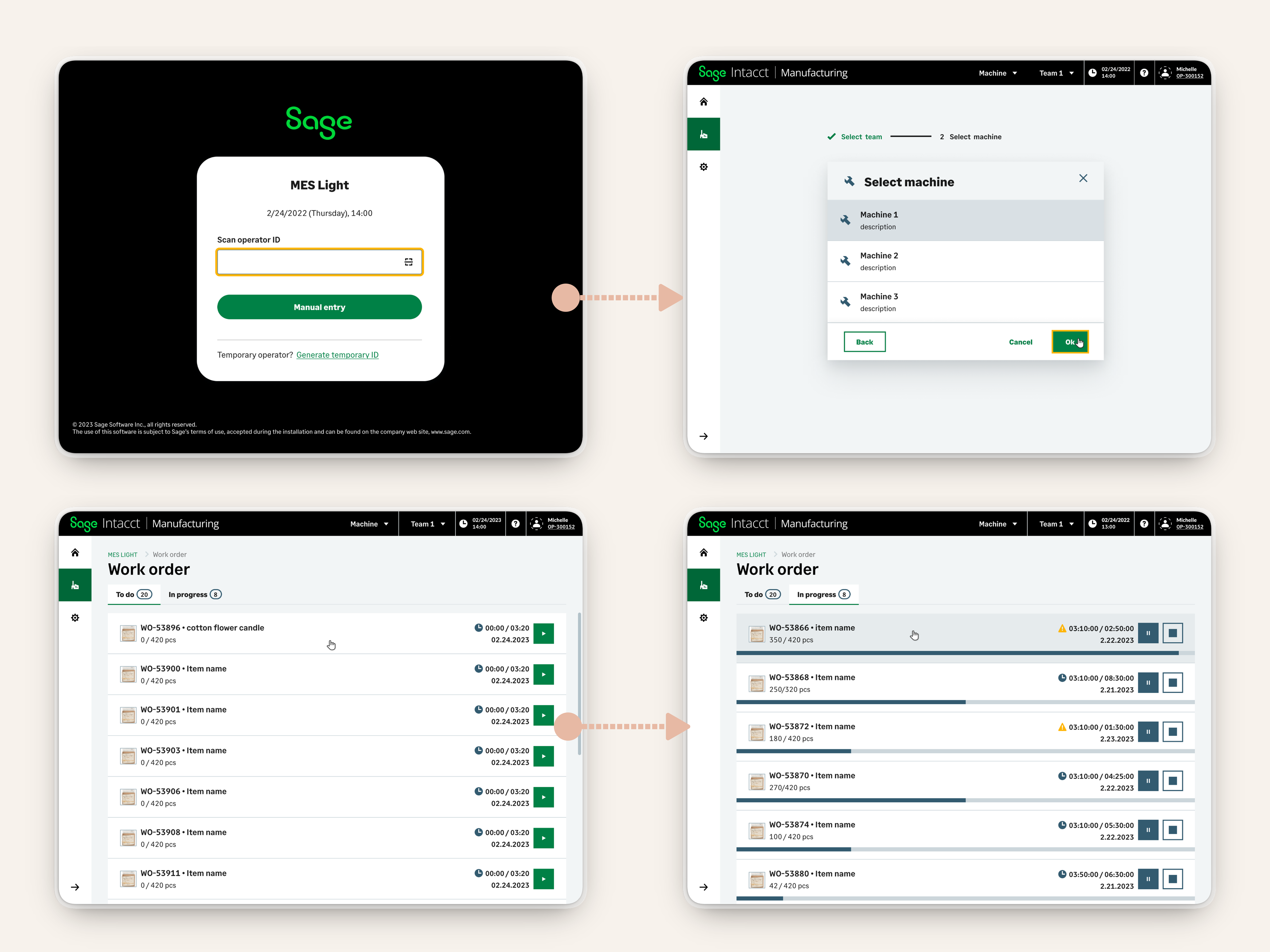

In 2022, I designed and delivered the first MVP to replace paper/Excel with a workable digital execution loop:

- Select Machine and Team/Individual after login

- station queue (To Do / In Progress)

- core actions (Start / Pause / Finish)

- initial reason capture for interruptions and traceable logs

Intent: ship a “first usable system” that operators could realistically run on the line.

The 2022 MVP established the foundation, but real usage patterns in 2023 revealed a different product reality: the app was increasingly used as a station-bound shared kiosk.

That changes the design problem fundamentally:

- the device belongs to a station, not a person

- operators rotate frequently (shift change, breaks, temporary staff)

- a “simple login” model doesn’t guarantee accurate personal time logs

- handover becomes a data integrity risk, not just a UX moment

2023 focus: remap the MVP logic and IA to shared usage without breaking runtime continuity or traceability.

2023 Design Goals

- Station-first experience (reduce wrong context / wrong machine usage)

- Support frequent handover without stopping production

- Keep machine runtime and operator time logs accurate and auditable

- Reduce cognitive load with a calm, glanceable UI optimized for gloves

Two clocks (separating “machine time” from “human time”)

- Machine/Step runtime: continuous if production continues (supports KPI and loss analysis)

- Operator time log: recorded as step-level operator sessions (start/end + pause events)
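To make the separation concrete, here is a minimal Python sketch of the two clocks. It is an illustration under assumptions, not the shipped data model: the names `StepRun` and `OperatorSession`, their fields, and the example timestamps are all hypothetical, and pause events are left out for brevity.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class OperatorSession:
    """Human clock: one operator's time on a step (hypothetical shape)."""
    operator_id: str
    start: datetime
    end: Optional[datetime] = None  # None while the session is still open

    def duration(self, now: datetime) -> timedelta:
        return (self.end or now) - self.start


@dataclass
class StepRun:
    """Machine clock: step runtime, independent of who is operating."""
    step_id: str
    started_at: datetime
    finished_at: Optional[datetime] = None
    sessions: List[OperatorSession] = field(default_factory=list)

    def machine_runtime(self, now: datetime) -> timedelta:
        # Runs continuously from start to finish, whoever is at the kiosk.
        return (self.finished_at or now) - self.started_at

    def operator_time(self, operator_id: str, now: datetime) -> timedelta:
        # Sum only the sessions that belong to this operator.
        total = timedelta()
        for session in self.sessions:
            if session.operator_id == operator_id:
                total += session.duration(now)
        return total


# Example: operator A works two hours, then B takes over on the same run.
t0 = datetime(2023, 1, 1, 8, 0)
run = StepRun("step-10", started_at=t0)
run.sessions.append(OperatorSession("op-A", t0, t0 + timedelta(hours=2)))
run.sessions.append(OperatorSession("op-B", t0 + timedelta(hours=2)))
now = t0 + timedelta(hours=3)
```

In this example the machine clock reads three hours at `now`, while the operator clocks read two hours for `op-A` and one hour for `op-B`. The point of the design is that neither number has to be derived from the other.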

Two handovers (because handover has two operational intents)

- Hot handover: switch operator without stopping machine/runtime

- Cold handover: stop/pause with a required reason (end shift, material shortage, QC hold, failure…)
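The difference between the two handover intents can be sketched as two small functions. This is a hedged illustration only: `Step`, `hot_handover`, `cold_handover`, the state strings, and the log tuple layout are assumptions made for the example, not the engineering team's state model.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass
class Step:
    state: str = "running"  # "running" or "paused" (assumed state names)
    operator: Optional[str] = None
    # Audit log of (timestamp, event, detail) tuples.
    log: List[Tuple[datetime, str, str]] = field(default_factory=list)


def hot_handover(step: Step, new_operator: str, at: datetime) -> None:
    """Swap the operator; the machine/step state is left untouched."""
    if step.state != "running":
        raise ValueError("hot handover only applies to a running step")
    step.log.append((at, "session_end", step.operator or ""))
    step.operator = new_operator
    step.log.append((at, "session_start", new_operator))


def cold_handover(step: Step, reason: str, at: datetime) -> None:
    """Pause the step; a non-empty reason is mandatory."""
    if not reason.strip():
        raise ValueError("a pause reason is required")
    step.log.append((at, "session_end", step.operator or ""))
    step.log.append((at, "paused", reason))
    step.state = "paused"
    step.operator = None
```

Keeping the two paths as separate functions mirrors the design choice: a hot handover never touches the step state, and a cold handover cannot complete without a reason.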

In kiosk mode, the safest IA is “station context first”:

Context (Assigned by Supervisor):

- Team / Individual (if applicable)

- Machine / Line

Operator Workflow:

- View Station queue (To Do / In Progress)

- Open a Work Order scoped to that station/operation

- Execute via Steps

“Kiosk entry is station-first: pick the line before viewing work.”

“Queue is scoped to the station to reduce noise and wrong starts.”

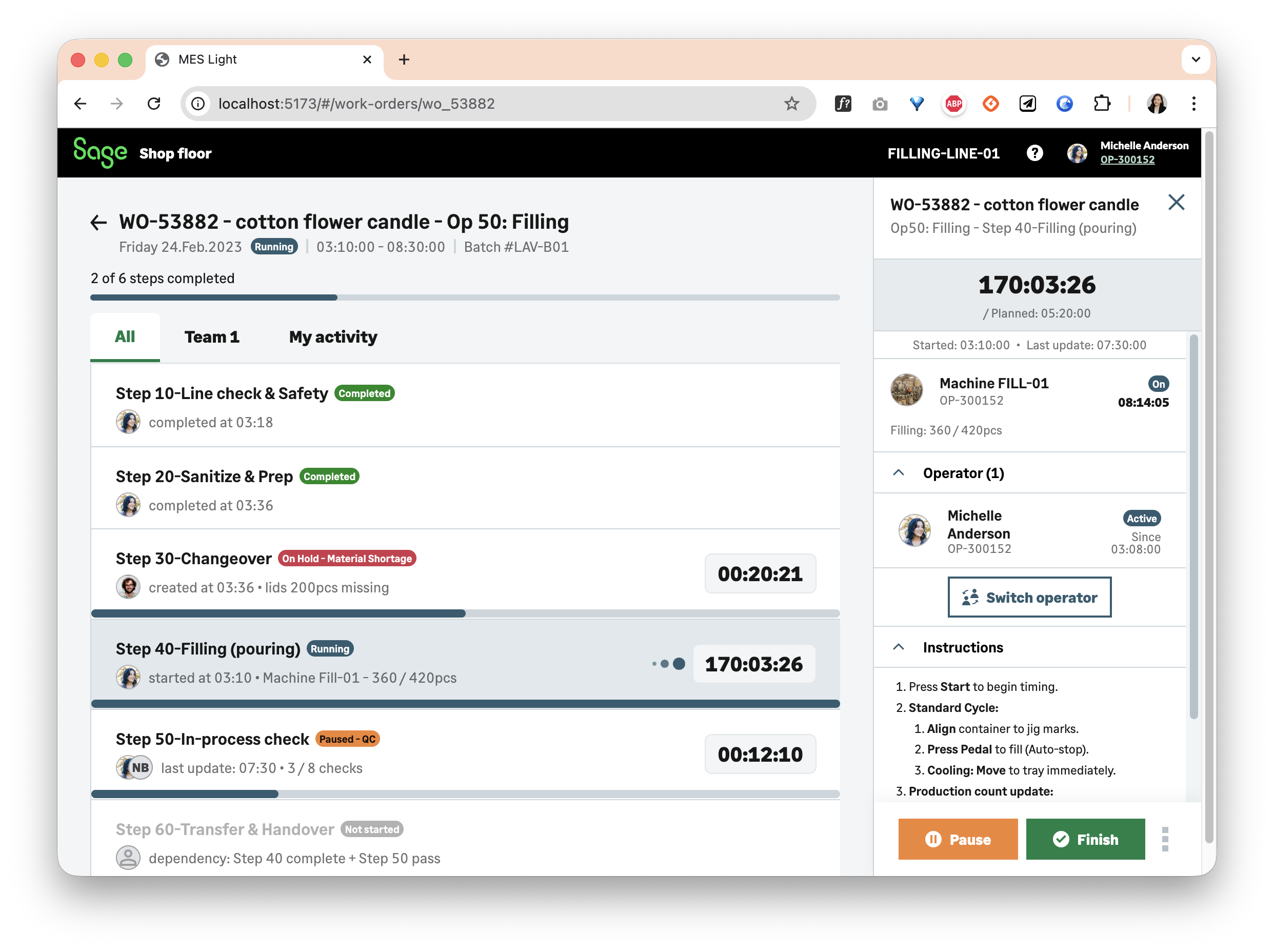
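The station-scoped queue can be illustrated with a small filter. This is a sketch under assumptions: `WorkOrder`, its fields, and the status strings are hypothetical names chosen for the example, not the product's data model.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class WorkOrder:
    wo_id: str
    station_id: str
    status: str  # "todo", "in_progress", or "done" (assumed values)


def station_queue(orders: List[WorkOrder], station_id: str) -> Dict[str, List[str]]:
    """Return only this station's work orders, grouped into the two queue tabs."""
    queue: Dict[str, List[str]] = {"To Do": [], "In Progress": []}
    for wo in orders:
        if wo.station_id != station_id:
            continue  # other stations' work never reaches this kiosk
        if wo.status == "todo":
            queue["To Do"].append(wo.wo_id)
        elif wo.status == "in_progress":
            queue["In Progress"].append(wo.wo_id)
    return queue


orders = [
    WorkOrder("WO-1", "line-A", "todo"),
    WorkOrder("WO-2", "line-B", "todo"),
    WorkOrder("WO-3", "line-A", "in_progress"),
    WorkOrder("WO-4", "line-A", "done"),
]
line_a = station_queue(orders, "line-A")
```

Because the station is fixed before the queue is built, an operator at `line-A` cannot start `WO-2` by mistake: it is simply never listed.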

The main execution screen intentionally keeps the operator in one stable workspace:

- Left: Step cards (sequence + context)

- Right: Step detail panel (details, instructions, and actions for the selected step)

The panel is step-level (not station-level): selecting a step card updates the panel with that step’s content.

“Actions are always bound to a specific step to avoid ambiguity.”

“Instructions live next to actions, reducing navigation and context switching.”
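One way to picture step-bound actions is an API where every action takes a step identifier and is checked against that step's state. The sketch below is illustrative only: the transition table, the state names, and the idea that "start" also resumes a paused step are assumptions, not confirmed behaviour.

```python
from typing import Dict, List

# Hypothetical transition table: which actions each step state allows.
ALLOWED_ACTIONS: Dict[str, List[str]] = {
    "todo": ["start"],
    "running": ["pause", "finish"],
    "paused": ["start"],  # assumption: "start" resumes a paused step
    "done": [],
}
NEXT_STATE: Dict[str, str] = {
    "start": "running",
    "pause": "paused",
    "finish": "done",
}


def available_actions(steps: Dict[str, str], step_id: str) -> List[str]:
    """Actions the detail panel would offer for the selected step."""
    return ALLOWED_ACTIONS[steps[step_id]]


def apply_action(steps: Dict[str, str], step_id: str, action: str) -> None:
    """Apply an action to exactly one step; there is no order-level action."""
    if action not in available_actions(steps, step_id):
        raise ValueError(f"{action!r} is not allowed on step {step_id!r}")
    steps[step_id] = NEXT_STATE[action]
```

Since no function acts on a whole work order, an operator cannot pause "something" without first naming the step, which is the ambiguity the panel is meant to remove.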

Decision A — Station-first IA

The Challenge: I initially designed a user-first IA where operators would log in and see "their" tasks across all machines. But field observations revealed that operators often forgot which machine they were logged into, causing data to be attributed to the wrong station.

My Pivot: I proposed flipping the hierarchy: station context first, then tasks. I presented field study evidence showing that shared kiosks are station-owned, not person-owned.

Outcome: Faster starts + fewer wrong selections. Operators no longer accidentally logged time to the wrong machine.

Decision B — Step-level control

The Challenge: The 2022 MVP had actions at the work-order level. But in UAT, operators paused the wrong step because they couldn't tell which step the action applied to.

My Solution: I redesigned the UI so that Pause/Finish actions are bound to a specific step card. Trade-off: one extra tap to select a step.

Outcome: Fewer mis-operations and clearer ownership. Operators said: "Now I know exactly what I'm pausing."

Decision C — Operator sessions ("Two Clocks")

The Challenge: Payroll disputes arose because managers couldn't prove which operator worked which hours on a shared kiosk. The existing log only tracked machine time, not human time.

My Framework: I designed the "Two Clocks" model: machine runtime continues uninterrupted, while operator sessions are logged separately with start/end timestamps.

Outcome: Accurate attribution + auditable logs + fewer disputes. Supervisors validated the model: "finally, we can see who did what."

Decision D — Hot vs Cold handover

The Challenge: In the 2022 MVP, any operator change stopped the machine timer. But real production lines need to switch operators mid-run without losing continuity.

My Solution: I introduced two explicit handover types: Hot (switch operator, keep running) and Cold (pause with reason). Trade-off: two concepts to teach.

Outcome: Protects continuity and makes intent auditable. Operators learned the distinction quickly during UAT.

Decision E — Timers only where meaningful

The Challenge: Early prototypes showed timers on every step. But operators felt "watched" on manual steps where time variance is expected, leading to distrust of the system.

My Adaptation: I worked with the PM to define which step types deserve precision timing (machine cycles) and which don't (manual assembly). Trade-off: less granularity on some manual steps.

Outcome: Higher trust and calmer UI. Operators stopped complaining about "being timed all the time."
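As a rough illustration of this decision, timer visibility can be driven by step type rather than set per screen. The step type labels and the `StepCard` shape below are assumptions for the example; the actual classification was defined with the PM and is not reproduced here.

```python
from dataclasses import dataclass

# Assumption: illustrative labels, not the product's step taxonomy.
PRECISION_TIMED_TYPES = {"machine_cycle"}


@dataclass(frozen=True)
class StepCard:
    name: str
    step_type: str  # e.g. "machine_cycle" or "manual_assembly"

    @property
    def shows_timer(self) -> bool:
        # Only step types flagged for precision timing display a timer.
        return self.step_type in PRECISION_TIMED_TYPES


cards = [
    StepCard("CNC milling", "machine_cycle"),
    StepCard("Hand assembly", "manual_assembly"),
]
visible_timers = [card.name for card in cards if card.shows_timer]
```

Keeping the rule in one set means the list of timed step types can change without touching the step cards themselves.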

Hot Handover — Switch operator (machine keeps running)

- Trigger: “Switch operator”

- UI: modal overlay (no page change; preserves context)

- Result:

  - machine/step runtime continues

  - current operator session ends; a new session starts

  - responsibility stays clear without stopping production

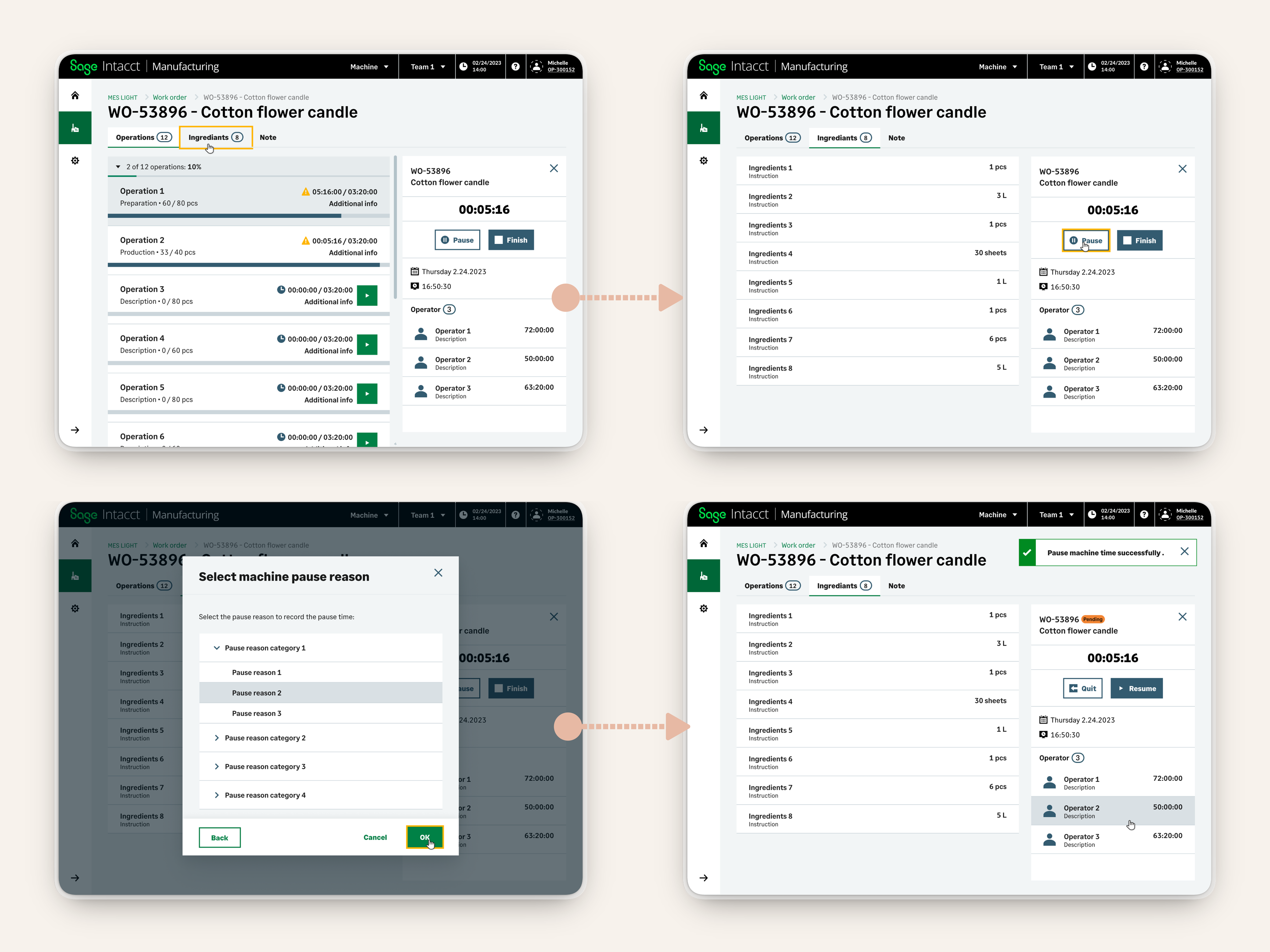

Cold Handover — Pause with reason (machine stops)

- Trigger: “Pause”

- UI: select reason (category → reason), required for traceability

- Result:

  - machine/step state changes (Paused / Hold)

  - reason is logged for analytics/audit

  - optional end-shift path returns the kiosk to the identify screen

I designed and led UAT sessions with 5 lead operators to validate adoption of the new "Two Clocks" model. By shifting from a "reporting" tool to a "station-first" workspace, I achieved:

- 0 critical path errors in UAT sessions

- 5 lead operators validated the design

- 100% handover success across hot and cold handovers, with no unintended runtime stop

1. Validated Usability Wins (UAT)

- Zero critical path errors: Operators completed both handover types as intended, switching operators without stopping machine runtime (hot) and pausing with a logged reason (cold).

- Reduced cognitive load: "Station-first" entry removed the step where operators could pick the wrong line before logging data.

"This is so much clearer than before. I don't have to think about which machine I'm on anymore." — Lead Operator (UAT feedback)

2. Enabled Business Capabilities (Strategic Impact)

Instead of relying on manual corrections, the new system architecture enforces data integrity by design:

- Traceability: Pauses now require reason codes, unlocking granular loss analysis for the first time.

- Auditable Accountability: The "Two Clocks" model successfully decouples machine runtime from operator shifts, enabling accuracy sufficient for payroll attribution and audit compliance.

- Data Trust: By preventing ambiguous states (e.g., "who is working on this?"), I reduced the downstream administrative burden of fixing time logs.

Note: Quantitative adoption metrics are not yet available as the 2023 revamp is in staged rollout.

- Handover is a data integrity feature, not just UX. This project taught me that in shared-device environments, handover logic must be designed as a first-class workflow. I now approach multi-user systems by asking "what happens when users switch?" from day one.

- Reduce decisions to reduce errors. The pivot to station-first IA was counterintuitive—I thought operators wanted more flexibility. Field research proved me wrong. This reinforced my belief that good UX often means removing options, not adding them.

- Separating "machine time" from "human time" unlocked trust. The "Two Clocks" model was my proposal to solve payroll disputes. Its success taught me that domain-specific mental models (like "two clocks") can be more powerful than generic patterns.

- Not everything worth measuring should be measured. I initially wanted timers everywhere. Operators pushed back. Learning to resist "data completeness" in favor of "data trust" was a key growth moment for me.